4 Introduction

4.1 Computer Graphics

Computer graphics is the technology concerned with creating, manipulating, and displaying visual content using computers. It encompasses both the creation of images from mathematical models (rendering) and the processing of images captured by devices such as cameras or scanners. At its core, computer graphics involves:

- 2D and 3D Modeling: Crafting objects and scenes using geometric shapes.

- Rendering: Converting models into images using algorithms that simulate light, shadow, and texture.

- Animation: Bringing models to life through movement and changes over time.

At its foundation, computer graphics is about representing images digitally.

4.2 Images

Images are at the heart of computer graphics, serving as the primary medium through which visual information is communicated. They are composed of tiny elements called pixels, each representing a specific color and intensity.

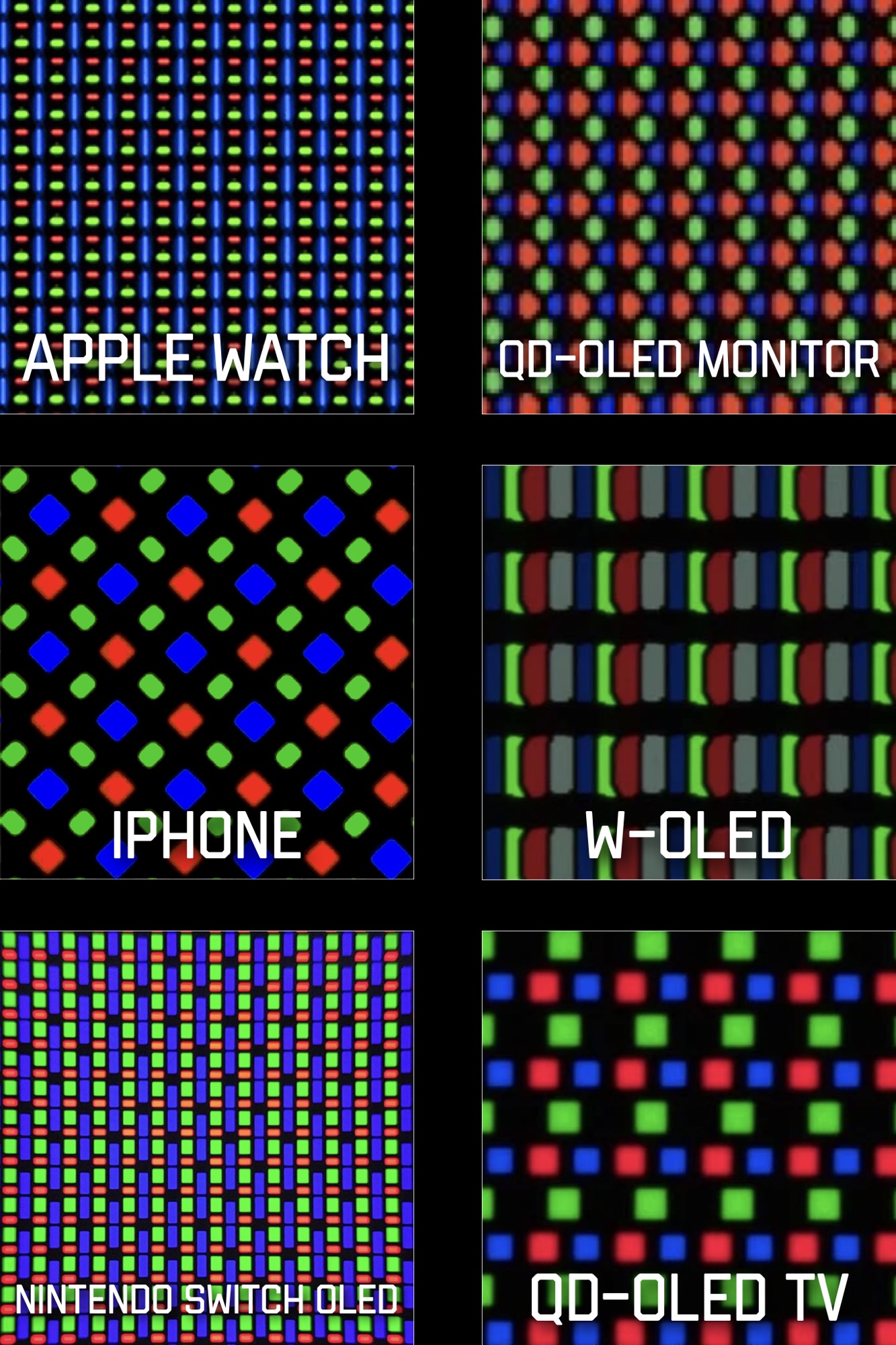

4.2.1 Pixels

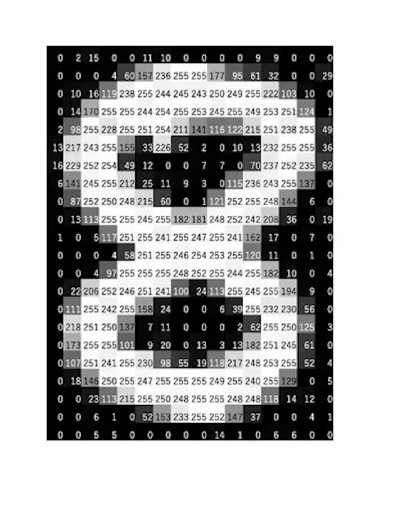

Pixels, short for “picture elements,” are the smallest units of an image that can be individually processed and displayed on a screen. Each pixel carries information about its color and brightness, and collectively, pixels form the images we see on digital devices. Here is an example of pixel art:

![]()

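Depending on the colour space, an image is represented by one or more matrices in which each point (pixel) of a grid has an associated value: a single intensity for a grayscale image, or one value per channel (such as red, green, and blue) for a colour image. For example, a grayscale image with dimensions \( M \times N \) is represented as a matrix with \( M \) rows and \( N \) columns, where each entry is typically an 8-bit intensity from 0 (black) to 255 (white):

\[ \begin{bmatrix} 0 & 255 & 128 & \cdots \\ 255 & 128 & 0 & \cdots \\ 128 & 0 & 255 & \cdots \\ \vdots & \vdots & \vdots & \ddots \end{bmatrix} \]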
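To make the matrix view concrete, here is a minimal Python sketch that stores a small grayscale image as a list of rows and a colour image as a grid of (R, G, B) tuples. The pixel values and the names `gray` and `rgb` are illustrative; real programs usually rely on an array or image library rather than nested lists.

```python
# A 3 x 4 grayscale image: M = 3 rows, N = 4 columns, 8-bit intensities (0 = black, 255 = white).
gray = [
    [0, 255, 128, 64],
    [255, 128, 0, 32],
    [128, 0, 255, 16],
]

M, N = len(gray), len(gray[0])
print(M, N)            # 3 4
print(gray[1][2])      # row 1, column 2 -> 0

# A colour (RGB) image stores three values per pixel: an M x N grid of (R, G, B) tuples.
rgb = [[(v, v, v) for v in row] for row in gray]   # grey pixels have R == G == B
rgb[0][0] = (255, 0, 0)                            # paint the top-left pixel pure red
print(rgb[0][0])       # (255, 0, 0)
```

Indexing is row first, then column, matching the \( M \) rows by \( N \) columns convention above.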

4.2.2 Colour Spaces

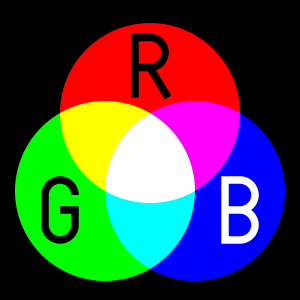

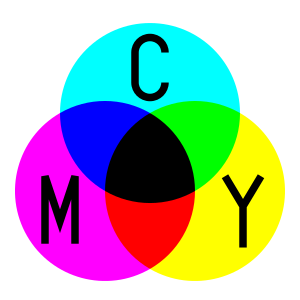

Colour spaces define the range of colors that can be represented in an image. Common colour spaces include RGB (Red, Green, Blue), CMYK (Cyan, Magenta, Yellow, Black), HSL (Hue, Saturation, Lightness), and HSV (Hue, Saturation, Value). Each space has its own applications and characteristics:

- RGB: Used for digital screens, combining red, green, and blue light to produce various colors. (Additive Colour Space)

- CMYK: Used in printing, combining cyan, magenta, yellow, and black inks. (Subtractive Colour Space)

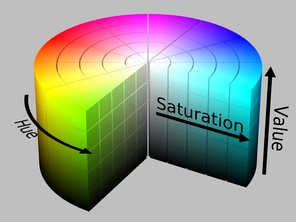

- HSL: Defines colors in terms of hue, saturation, and lightness, offering an intuitive way to manipulate colors.

- HSV: Defines colors in terms of hue, saturation, and value, and is also often called HSB (B for brightness).

- Grayscale: Defines colour in terms of a single value representing the amount of light or intensity of a pixel.
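The conversions below are a minimal sketch of how the same colour moves between spaces. The grayscale weights are the ITU-R BT.601 luma coefficients, one common choice among several, and the RGB-to-CMYK formula is the naive textbook conversion that ignores the ink and printer profiles real print workflows apply. Both function names are illustrative.

```python
def rgb_to_grayscale(r, g, b):
    """Collapse an 8-bit RGB pixel to one intensity using ITU-R BT.601 luma weights."""
    # Green is weighted most heavily because the eye is most sensitive to it.
    return round(0.299 * r + 0.587 * g + 0.114 * b)

def rgb_to_cmyk(r, g, b):
    """Naive conversion from 8-bit RGB to CMYK fractions in the range 0.0-1.0."""
    r, g, b = r / 255, g / 255, b / 255
    k = 1 - max(r, g, b)               # black ink covers whatever no channel lights up
    if k == 1:                         # pure black: avoid dividing by zero below
        return (0.0, 0.0, 0.0, 1.0)
    c = (1 - r - k) / (1 - k)          # cyan absorbs red
    m = (1 - g - k) / (1 - k)          # magenta absorbs green
    y = (1 - b - k) / (1 - k)          # yellow absorbs blue
    return (c, m, y, k)

print(rgb_to_grayscale(255, 0, 0))     # 76: pure red looks fairly dark in grayscale
print(rgb_to_cmyk(255, 0, 0))          # (0.0, 1.0, 1.0, 0.0): red = magenta + yellow ink
```

The CMYK result illustrates the subtractive model: to print red, the inks must absorb green and blue light, which is exactly what magenta and yellow do.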

HSL and HSV are the two most common cylindrical-coordinate representations of points in an RGB color model. Both rearrange the geometry of RGB in an attempt to be more intuitive and perceptually relevant than the Cartesian (cube) representation. These spaces are often better suited to image recognition and segmentation tasks commonly tackled in machine learning.

In the RGB color space, changes in lighting can significantly affect the red, green, and blue components, making it challenging to recognize objects consistently under different lighting conditions. In HSV and HSL, the hue component is less affected by changes in light intensity, making these color spaces more robust to lighting variations. This consistency is crucial for reliable image recognition. Features extracted from the HSV or HSL color spaces are often more discriminative for certain tasks, such as segmentation and object detection. For instance, in skin color detection the hue and saturation components are particularly useful, because skin tones can vary significantly in RGB values but occupy a narrower range in HSV or HSL.
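The robustness argument can be checked with Python's standard `colorsys` module. This sketch assumes an idealised lighting change in which every RGB component is simply halved; real lighting changes are messier, so treat it as an illustration of the principle rather than a guarantee. The colour values are invented for the example.

```python
import colorsys

def hsv_of(rgb255):
    """Convert an 8-bit (R, G, B) triple to (h, s, v), each in the range 0.0-1.0."""
    r, g, b = (c / 255 for c in rgb255)
    return colorsys.rgb_to_hsv(r, g, b)

# The same orange surface, once brightly lit and once under half the light
# (idealised: every RGB component is simply halved).
bright = (200, 120, 40)
dim = (100, 60, 20)

h1, s1, v1 = hsv_of(bright)
h2, s2, v2 = hsv_of(dim)

print([b - d for b, d in zip(bright, dim)])   # [100, 60, 20]: every RGB channel changes
print(round(h1 * 360), round(h2 * 360))       # 30 30: hue (in degrees) is unchanged
print(round(s1, 2), round(s2, 2))             # 0.8 0.8: so is saturation
print(round(v1, 2), round(v2, 2))             # 0.78 0.39: only value tracks the lighting
```

A segmentation rule written as a hue range (for example "between 20 and 40 degrees") would therefore match both pixels, whereas a fixed RGB range would not.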

4.2.3 Raster vs Vector

Raster and vector graphics are two primary types of image representations:

- Raster Graphics: Made up of pixels, suitable for complex images with fine details like photographs.

- Vector Graphics: Composed of paths defined by mathematical equations, ideal for images requiring scalability without loss of quality, such as logos and illustrations.

![]()

GIF, PNG, JPEG, and WebP are raster graphics formats: an image is specified by storing a color value for each pixel. SVG, on the other hand, is fundamentally a vector graphics format (although SVG images can include raster images). SVG is an XML-based language for describing two-dimensional vector graphics images. “SVG” stands for “Scalable Vector Graphics,” and the term “scalable” indicates one of the advantages of vector graphics: there is no loss of quality when the size of the image is increased.
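The scalability difference can be sketched in a few lines of Python. Here a circle described by its centre and radius plays the role of the vector image; enlarging the stored pixels (nearest-neighbour upscaling) gives a blocky result, while enlarging the shape and re-rasterizing it gives smooth edges. All function names are hypothetical and the output is ASCII art rather than a real image file.

```python
def rasterize_circle(cx, cy, r, width, height):
    """Render a vector circle (centre cx, cy and radius r) onto a width x height grid of 0/1 pixels."""
    return [[1 if (x + 0.5 - cx) ** 2 + (y + 0.5 - cy) ** 2 <= r * r else 0
             for x in range(width)]
            for y in range(height)]

def upscale_nearest(image, k):
    """Enlarge a raster k times by repeating every pixel in a k x k block."""
    return [[row[x // k] for x in range(len(row) * k)]
            for row in image
            for _ in range(k)]

def show(image):
    for row in image:
        print("".join("#" if p else "." for p in row))

small = rasterize_circle(4, 4, 3, 8, 8)          # the raster: only pixels are stored
blocky = upscale_nearest(small, 4)               # enlarge the pixels themselves: jagged edges
sharp = rasterize_circle(16, 16, 12, 32, 32)     # enlarge the shape, then re-rasterize: smooth edges
show(blocky)
print()
show(sharp)
```

Both results are 32 x 32, but only the vector version recovers the detail appropriate to the new size, because the mathematical description was kept rather than just its pixels.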

4.2.4 Painting vs Drawing

In computer graphics, painting and drawing are techniques used to create images:

- Painting: Involves applying colors to a canvas using digital brushes, mimicking traditional painting methods.

- Drawing: Focuses on creating images using lines and shapes, often resulting in cleaner and more precise graphics.

4.2.5 Compression

Image compression reduces the file size of images to save storage space and improve transmission speed. There are two main types:

- Lossy Compression: Reduces file size by removing some image data, which can result in a loss of quality (e.g., JPEG).

- Lossless Compression: Reduces file size without any loss of quality (e.g., PNG).
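As a rough illustration of the difference, the sketch below uses Python's standard `zlib` module, which implements DEFLATE, the lossless algorithm PNG uses internally (PNG additionally applies per-row prediction filters, omitted here). The lossy step is a deliberately simplified stand-in: it quantises raw pixel values, whereas JPEG quantises frequency (DCT) coefficients. The synthetic gradient image is invented for the example.

```python
import zlib

WIDTH, HEIGHT = 64, 64
# Synthetic 8-bit grayscale image: a diagonal gradient, stored row by row.
raw = bytes((x + y) * 2 % 256 for y in range(HEIGHT) for x in range(WIDTH))

# Lossless: DEFLATE restores every byte exactly.
packed = zlib.compress(raw, 9)
restored = zlib.decompress(packed)

# Lossy (illustrative): quantise each pixel to 8 grey levels before compressing.
STEP = 32
quantised = bytes((v // STEP) * STEP for v in raw)
packed_lossy = zlib.compress(quantised, 9)
approx = zlib.decompress(packed_lossy)

print("sizes:", len(raw), len(packed), len(packed_lossy))
max_error = max(abs(a - b) for a, b in zip(raw, approx))
print(restored == raw, approx == raw, max_error)
```

The lossless round trip reproduces the original exactly; the lossy version throws away fine intensity differences (up to `STEP - 1` levels per pixel) in exchange for data that is usually easier to compress.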

4.3 Models

In computer graphics, models are the fundamental building blocks used to create and represent objects and scenes. These models are mathematical representations of three-dimensional objects, encompassing their geometry, appearance, and behaviour.

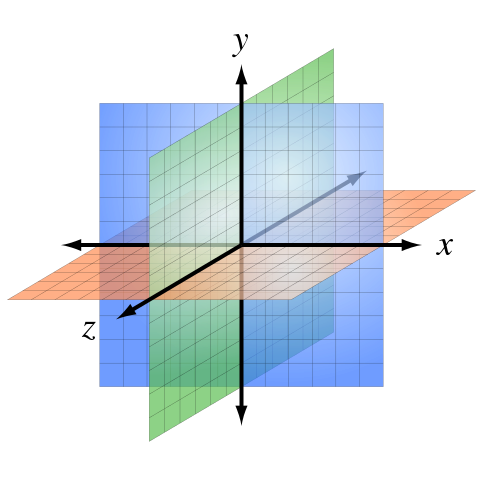

4.3.1 3D Space (World)

The 3D space, or world, is the virtual environment in which models exist. It is defined by a coordinate system that specifies the position and orientation of objects.

4.3.1.1 Coordinate Systems

Coordinate systems are used to locate points in space.

The most common system in computer graphics is the Cartesian coordinate system, which uses x, y, and z axes to define positions in 3D space.

- X-Axis: Represents the horizontal direction.

- Y-Axis: Represents the vertical direction.

- Z-Axis: Represents the depth direction (coming out of or going into the plane).

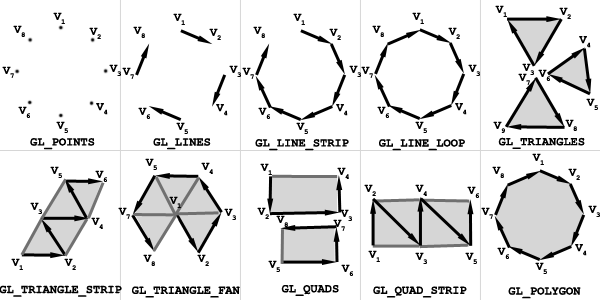

4.3.2 Geometric Modelling

Geometric modelling is the process of creating a scene by specifying the geometric objects it contains, together with the geometric transforms to be applied to them and the attributes that determine their appearance. The starting point is to construct an “artificial 3D world” as a collection of simple geometric shapes arranged in three-dimensional space. These simple shapes are often called primitives, or are themselves built from primitives.

4.3.2.1 Primitives

Primitives are the basic building blocks of 3D models, such as points, lines, and polygons. Common primitives include:

- Points: The simplest form of a 3D object, representing a single location in space.

- Vertices: The basic building blocks of a 3D model. A vertex is a point in 3D space defined by its coordinates (x, y, z).

- Lines: Connect two points and are used to create edges.

- Edges: Line segments connecting two vertices.

- Polygons: Flat shapes with three or more sides, used to construct the surfaces of 3D objects.

- Faces: Flat surfaces bounded by edges, typically triangles or quadrilaterals. A collection of faces forms the surface of the 3D model.

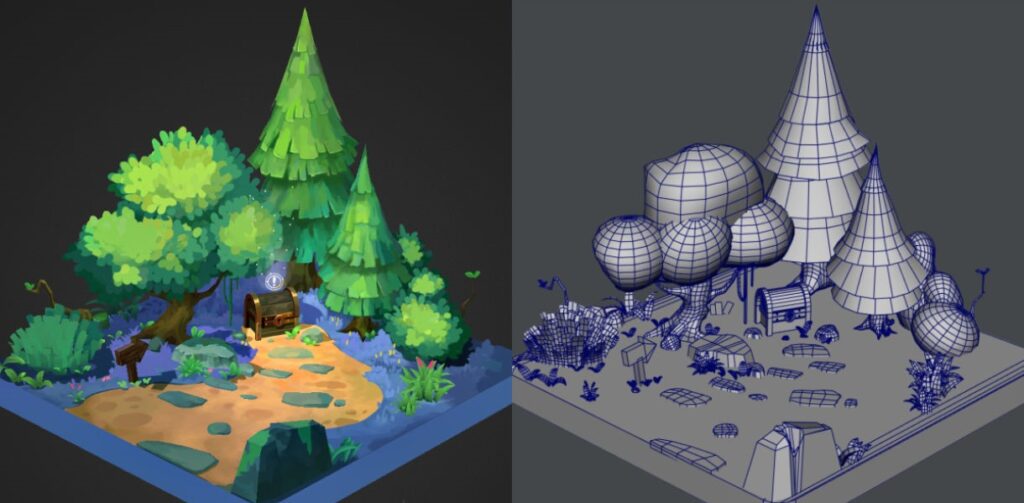

4.3.3 Meshes

A collection of vertices, edges, and faces that defines the shape of a 3D object. Meshes are used to approximate complex shapes using simpler geometric primitives. The detail and quality of a model are enhanced by the complexity of its mesh. However, this increased detail requires defining a greater number of vertices, which in turn demands more processing power and memory, impacting performance.
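A common way to store a mesh is as an indexed list: vertices are stored once, and each face refers to them by index, so shared vertices are not duplicated. The minimal Python sketch below, assuming a tetrahedron as the example shape, derives the edges from the faces and checks Euler's formula \( V - E + F = 2 \), which holds for any closed mesh without holes. The helper name `edges_of` is hypothetical.

```python
# Indexed mesh: a shared vertex list plus faces that refer to vertices by index.
vertices = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (0.0, 0.0, 1.0),
]
faces = [            # four triangles forming a closed tetrahedron
    (0, 2, 1),
    (0, 1, 3),
    (0, 3, 2),
    (1, 2, 3),
]

def edges_of(faces):
    """Collect each undirected edge once, no matter how many faces share it."""
    edges = set()
    for face in faces:
        for i in range(len(face)):
            a, b = face[i], face[(i + 1) % len(face)]
            edges.add((min(a, b), max(a, b)))
    return edges

V, E, F = len(vertices), len(edges_of(faces)), len(faces)
print(V, E, F, V - E + F)   # 4 6 4 2
```

Adding detail to such a mesh means growing both lists, which is where the memory and processing cost mentioned above comes from.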

Level of Detail (LOD) is a technique used in computer graphics, particularly in games and movies, to manage the complexity and detail of 3D models and scenes. The primary goal of LOD is to optimize performance and resource usage while maintaining visual fidelity, especially when dealing with high-resolution assets that can be computationally expensive to render.

In video games, LOD is crucial for ensuring smooth and efficient rendering as players navigate through dynamic and complex environments. Here’s how it works:

- Multiple Detail Levels: Each 3D model is created in several versions, each with a different level of detail. For example, a character model might have a high-detail version with thousands of polygons and a low-detail version with significantly fewer polygons.

- Distance-Based Switching: The game engine dynamically switches between these versions based on the player’s distance from the object. When the player is close to the object, the high-detail model is used. As the player moves further away, the engine progressively uses lower-detail models.
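Distance-based switching can be sketched as a threshold lookup. The switch distances and triangle counts below are hypothetical values chosen for illustration; real engines may also use screen-space size or add hysteresis so that models do not flicker between levels at a boundary.

```python
import bisect
import math

# Hypothetical switch distances in world units: nearer than 10 -> LOD 0 (full detail),
# 10-30 -> LOD 1, 30-80 -> LOD 2, beyond 80 -> LOD 3 (coarsest).
LOD_DISTANCES = [10.0, 30.0, 80.0]
LOD_TRIANGLES = [20000, 5000, 1200, 300]   # illustrative triangle counts for each version

def select_lod(camera_pos, object_pos):
    """Pick a detail level from the camera-to-object distance."""
    distance = math.dist(camera_pos, object_pos)
    # bisect_right counts how many thresholds the distance has passed.
    return bisect.bisect_right(LOD_DISTANCES, distance)

camera = (0.0, 0.0, 0.0)
for obj in [(3.0, 4.0, 0.0), (0.0, 0.0, 45.0), (120.0, 0.0, 0.0)]:
    level = select_lod(camera, obj)
    print(obj, "-> LOD", level, f"({LOD_TRIANGLES[level]} triangles)")
```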

4.3.4 Geometric Transformations

Once an object has been created, we need a way to change it and to make it interact with the rest of the scene.

Geometric transformations are operations that modify the position, orientation, and scale of models. Common transformations include:

- Translation: Moving an object from one location to another.

- Rotation: Spinning an object around an axis.

- Scaling: Changing the size of an object.
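The three transformations can be written as small functions on \( (x, y, z) \) points. This is a minimal sketch, assuming a right-handed coordinate system and rotation about the z-axis only; graphics pipelines normally express the same operations as 4 x 4 matrices so they can be combined into one. The example also shows that the order in which transformations are applied changes the result.

```python
import math

def translate(p, dx, dy, dz):
    """Move point p by (dx, dy, dz)."""
    x, y, z = p
    return (x + dx, y + dy, z + dz)

def rotate_z(p, degrees):
    """Rotate point p counter-clockwise about the z-axis (as seen looking down from +z)."""
    a = math.radians(degrees)
    x, y, z = p
    return (x * math.cos(a) - y * math.sin(a), x * math.sin(a) + y * math.cos(a), z)

def scale(p, sx, sy, sz):
    """Scale point p about the origin."""
    x, y, z = p
    return (x * sx, y * sy, z * sz)

p = (1.0, 0.0, 0.0)
# The order of transformations matters:
a = rotate_z(translate(p, 1, 0, 0), 90)   # move first, then rotate: ends up near (0, 2, 0)
b = translate(rotate_z(p, 90), 1, 0, 0)   # rotate first, then move: ends up near (1, 1, 0)
print([round(c, 6) for c in a], [round(c, 6) for c in b])
print(scale((1.0, 2.0, 3.0), 2, 2, 2))
```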

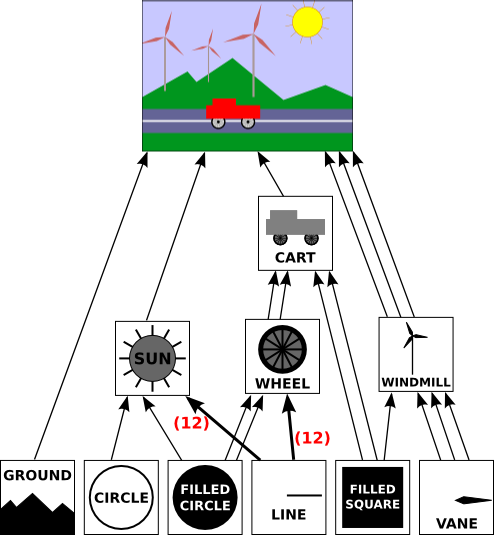

4.3.5 Hierarchical Modelling

A complex scene can contain a large number of primitives, and it would be very difficult to create the scene by giving explicit coordinates for each individual primitive. The solution, as any programmer should immediately guess, is to chunk together primitives into reusable components. For example, for a scene that contains several automobiles, we might create a geometric model of a wheel. An automobile can be modeled as four wheels together with models of other components. And we could then use several copies of the automobile model in the scene. Note that once a geometric model has been designed, it can be used as a component in more complex models.

Hierarchical modelling organizes complex models into a hierarchy of simpler components. This approach allows for easier manipulation and animation of models, as changes to parent components automatically affect their children. In the context of a complete scene, hierarchical modelling allows for the efficient reuse of assets. For example, instead of constructing each windmill in a scene from basic geometric primitives, you can create functions to build the constituent parts (such as the blades, tower, and base) and then a function to assemble these parts into a complete windmill. Once you have this windmill function, you can easily reuse it throughout your scene.
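The windmill idea can be sketched as a tiny scene graph. Each node stores a transform relative to its parent, using 2D transforms as 3 x 3 homogeneous matrices to keep the example short; a node's world position comes from composing its parent's transform with its own. The `Node` class, `make_windmill` function, and all dimensions are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two 3x3 matrices (2D transforms in homogeneous coordinates)."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def translation(tx, ty):
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]

def rotation(degrees):
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

def transform_point(m, x, y):
    return (m[0][0] * x + m[0][1] * y + m[0][2], m[1][0] * x + m[1][1] * y + m[1][2])

class Node:
    """Scene-graph node: a transform relative to its parent, plus child nodes."""
    def __init__(self, name, local, children=()):
        self.name, self.local, self.children = name, local, list(children)

    def world_positions(self, parent_world=None, out=None):
        # A node's world transform is its parent's world transform times its own local one,
        # so moving or rotating a parent automatically carries all of its children along.
        world = self.local if parent_world is None else matmul(parent_world, self.local)
        out = {} if out is None else out
        out[self.name] = transform_point(world, 0, 0)   # where this node's origin lands
        for child in self.children:
            child.world_positions(world, out)
        return out

def make_windmill(x, blade_angle):
    """Hypothetical windmill: tower at (x, 0), hub 10 units up, a blade tip 3 units from the hub."""
    tip = Node("blade_tip", translation(3, 0))
    hub = Node("hub", matmul(translation(0, 10), rotation(blade_angle)), [tip])
    return Node("tower", translation(x, 0), [hub])

# The same function builds every windmill; only the parameters differ.
for windmill in (make_windmill(5, 0), make_windmill(5, 90), make_windmill(20, 45)):
    print({name: tuple(round(c, 2) for c in pos)
           for name, pos in windmill.world_positions().items()})
```

Animating the blades only requires changing the hub's rotation; the blade tip follows without its own coordinates ever being edited.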

4.4 Rendering

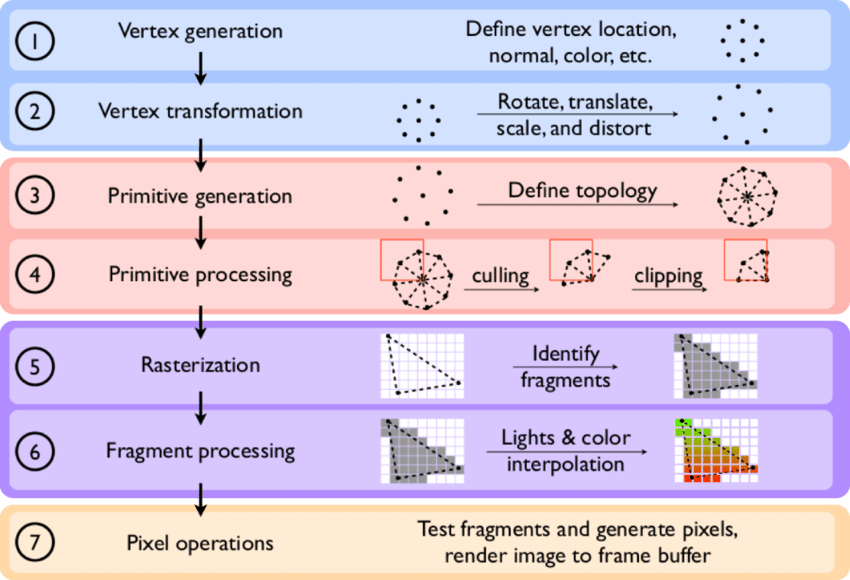

In general, the ultimate goal of 3D graphics is to produce 2D images of the 3D world. Rendering is the process responsible for generating the final visual output from 2D and 3D models. It involves applying various algorithms and techniques to simulate the appearance of objects, taking into account factors such as lighting, shading, texture, and perspective. Although the technical details of rendering methods vary, the common challenges are handled by the graphics (rendering) pipeline, implemented on a rendering device such as a GPU.

4.4.1 Rendering Pipeline

The rendering pipeline is a sequence of steps that transform 3D models into 2D images. Key stages include:

- Vertex Processing: Transforming vertices to their final positions.

- Rasterization: Converting geometric data into pixels.

- Fragment Processing: Applying shading and texturing to pixels.

- Output Merging: Combining pixels to produce the final image.
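The four stages can be illustrated with a toy software rasterizer. This is a teaching sketch, not how a GPU is actually implemented: it perspective-projects triangle vertices (vertex processing), tests pixel centres against the triangle with edge functions (rasterization), assigns a flat colour (fragment processing), and keeps the nearest fragment with a depth buffer (output merging). Interpolating camera-space depth linearly in screen space is a simplification, and all names and values are hypothetical.

```python
def project(vertex, width, height, focal=1.0):
    """Vertex processing: perspective-project a camera-space point (z > 0) to screen space."""
    x, y, z = vertex
    ndc_x, ndc_y = focal * x / z, focal * y / z        # perspective divide
    sx = (ndc_x + 1) * 0.5 * width                       # map [-1, 1] to [0, width]
    sy = (1 - ndc_y) * 0.5 * height                      # flip y so +y points up on screen
    return (sx, sy, z)

def edge(ax, ay, bx, by, px, py):
    """Twice the signed area of triangle (a, b, p); its sign says which side of a->b p lies on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

def draw_triangle(tri, color, color_buf, depth_buf):
    (x0, y0, z0), (x1, y1, z1), (x2, y2, z2) = tri
    area = edge(x0, y0, x1, y1, x2, y2)
    if area == 0:
        return                                           # degenerate triangle covers no pixels
    for py in range(len(color_buf)):
        for px in range(len(color_buf[0])):
            cx, cy = px + 0.5, py + 0.5                  # sample at the pixel centre
            # Barycentric weights; dividing by `area` makes them work for either winding order.
            w0 = edge(x1, y1, x2, y2, cx, cy) / area
            w1 = edge(x2, y2, x0, y0, cx, cy) / area
            w2 = edge(x0, y0, x1, y1, cx, cy) / area
            if w0 < 0 or w1 < 0 or w2 < 0:
                continue                                 # rasterization: centre is outside
            z = w0 * z0 + w1 * z1 + w2 * z2              # interpolated depth (simplified)
            if z < depth_buf[py][px]:                    # output merging: keep the nearest fragment
                depth_buf[py][px] = z
                color_buf[py][px] = color                # fragment processing: flat colour

W = H = 8
color_buf = [[None] * W for _ in range(H)]
depth_buf = [[float("inf")] * W for _ in range(H)]

far = [(-2, -2, 2), (2, -2, 2), (0, 2, 2)]
near = [(-0.5, -0.5, 1), (0.5, -0.5, 1), (0, 0.5, 1)]
for tri, color in [(near, "red"), (far, "blue")]:       # drawn near-first on purpose
    draw_triangle([project(v, W, H) for v in tri], color, color_buf, depth_buf)

for row in color_buf:
    print("".join({"red": "R", "blue": "B", None: "."}[c] for c in row))
```

Even though the far triangle is drawn last, the depth test keeps the near triangle visible where the two overlap.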

4.4.2 Colour/Material

Colour and material properties define the appearance of 3D models. Materials can simulate different surfaces, such as metal, wood, or glass, by adjusting properties like reflectivity, transparency, and texture.

Materials control how light interacts with the surface of the model.

4.4.3 Texture

Textures are images applied to the surfaces of 3D models to add detail and realism. They can represent various surface properties, such as colour, bumpiness, or reflectivity. You can think of a texture image as though it were printed on a rubber sheet that is stretched and pinned onto the mesh at appropriate positions. Textures are also a major consumer of memory in most renderers. Ideally we would use the highest-resolution textures available to achieve photorealism; however, such textures become very large. In chapter 10 we look at some machine learning approaches that have been applied to improve the performance of high-resolution texture rendering (namely Super Resolution and DLSS).
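To see how quickly textures grow, the sketch below computes the uncompressed size of a square texture, assuming 8-bit RGBA (4 bytes per texel). It optionally includes a full mipmap chain (successively halved copies used when the surface is far away), which adds roughly one third. Real engines often use GPU block-compression formats that shrink these numbers considerably; the function name is hypothetical.

```python
def texture_bytes(width, height, bytes_per_texel=4, mipmaps=False):
    """Uncompressed size of a texture, optionally including its full mipmap chain."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            return total
        w, h = max(1, w // 2), max(1, h // 2)   # each mip level halves both dimensions

MIB = 1024 * 1024
for size in (1024, 2048, 4096, 8192):
    base = texture_bytes(size, size) / MIB
    chain = texture_bytes(size, size, mipmaps=True) / MIB
    print(f"{size}x{size} RGBA8: {base:.0f} MiB, with mipmaps: {chain:.1f} MiB")
```

A single 4096 x 4096 texture already needs 64 MiB, or about 85.3 MiB with mipmaps, and a detailed material may combine several such maps (colour, normal, roughness).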

Lastly, textures can be generated at run time using procedural generation techniques. This approach can be used to produce dynamic effects such as clouds, streams of lava, or waterfalls.
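A procedural texture is a function from texture coordinates \( (u, v) \) to a value, evaluated on demand instead of being loaded from an image file. The sketch below uses simple value noise (random values on a grid, smoothly blended), one basic procedural technique; Perlin noise is a widely used gradient-noise alternative. Scrolling the sampling coordinates over time makes the pattern drift like clouds. All names and parameters are hypothetical.

```python
import math
import random

def make_value_noise(seed, lattice=8):
    """Return a function noise(u, v) in [0, 1) built from random values on a lattice x lattice grid."""
    rng = random.Random(seed)
    grid = [[rng.random() for _ in range(lattice)] for _ in range(lattice)]

    def smooth(t):
        return t * t * (3 - 2 * t)   # smoothstep: softens the creases of plain linear blending

    def noise(u, v):
        # Scale texture coordinates onto the lattice and wrap so the texture tiles seamlessly.
        x, y = u * lattice, v * lattice
        x0, y0 = int(math.floor(x)), int(math.floor(y))
        tx, ty = smooth(x - x0), smooth(y - y0)
        x0, y0 = x0 % lattice, y0 % lattice
        x1, y1 = (x0 + 1) % lattice, (y0 + 1) % lattice
        top = grid[y0][x0] * (1 - tx) + grid[y0][x1] * tx
        bottom = grid[y1][x0] * (1 - tx) + grid[y1][x1] * tx
        return top * (1 - ty) + bottom * ty

    return noise

clouds = make_value_noise(seed=42)
SIZE = 16

def cloud_frame(t, speed=0.05):
    """One 16x16 frame of 8-bit grey levels; shifting u over time makes the clouds drift."""
    return [[int(255 * clouds(x / SIZE + speed * t, y / SIZE)) for x in range(SIZE)]
            for y in range(SIZE)]

frame0 = cloud_frame(0)
frame1 = cloud_frame(1)
for row in frame0[:4]:
    print(row)
```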

Textures add fine details through the application of another image to the surface, such as patterns, logos, or imperfections, making the material look more realistic.

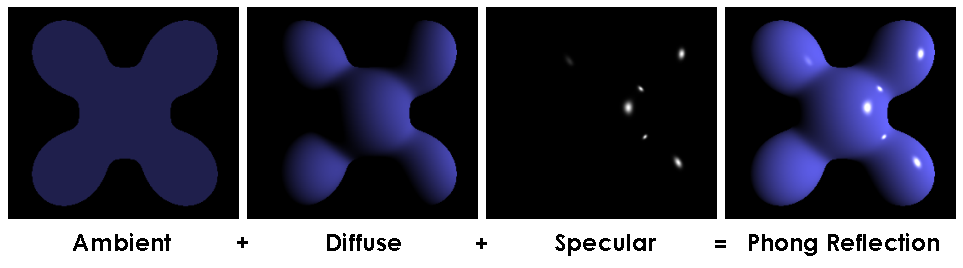

4.4.4 Lighting

Lighting is crucial for creating realistic images. It involves simulating the interaction of light with objects to produce effects like shadows, reflections, and highlights. Common lighting techniques include:

- Ambient Lighting: Provides a base level of light to all objects.

- Diffuse Lighting: Simulates light scattering in many directions.

- Specular Lighting: Creates highlights on shiny surfaces.
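The three terms are combined in the classic Phong reflection model, sketched below for a single surface point and a single white light. The coefficients `ka`, `kd`, `ks` and `shininess` are the kind of material properties described in the Colour/Material section; the default values here are hypothetical. Vectors are plain tuples, and `to_light` and `to_viewer` point away from the surface.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def phong_intensity(normal, to_light, to_viewer, ka=0.1, kd=0.7, ks=0.2, shininess=32):
    """Ambient + diffuse + specular brightness at one surface point (Phong reflection model)."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    ambient = ka                                   # constant base light
    n_dot_l = max(0.0, dot(n, l))
    diffuse = kd * n_dot_l                         # Lambert: brightest when facing the light
    # Mirror the light direction about the normal: r = 2(n.l)n - l
    r = tuple(2 * dot(n, l) * nc - lc for nc, lc in zip(n, l))
    # Specular highlight: strongest when the reflection points at the viewer; higher
    # shininess gives a smaller, sharper highlight. No highlight if the light is behind.
    specular = ks * max(0.0, dot(r, v)) ** shininess if n_dot_l > 0 else 0.0
    return ambient + diffuse + specular

light_overhead = phong_intensity((0, 0, 1), (0, 0, 1), (0, 0, 1))
light_grazing = phong_intensity((0, 0, 1), (1, 0, 0.2), (0, 0, 1))
light_behind = phong_intensity((0, 0, 1), (0, 0, -1), (0, 0, 1))
print(round(light_overhead, 3), round(light_grazing, 3), round(light_behind, 3))
```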

4.4.5 Shaders

Shaders are small programs that run on the GPU, controlling how vertices and pixels are processed. They enable advanced effects like realistic lighting, shadows, and surface details. Common types include:

- Vertex Shaders: Process vertex data.

- Fragment Shaders: Process pixel data.

- Geometry Shaders: Generate new geometry on the fly.
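Real shaders are written in shading languages such as GLSL or HLSL and run on the GPU. The Python sketch below only mimics the structure of a fragment shader: a small function is called once per pixel with that pixel's coordinates and returns a value, independently of every other pixel. That independence is what lets a GPU run thousands of these calls in parallel. The `vignette` shader and the runner function are hypothetical.

```python
def run_fragment_shader(shader, width, height):
    """Call `shader` once per pixel with normalised (u, v) coordinates; each call is independent."""
    return [[shader((x + 0.5) / width, (y + 0.5) / height) for x in range(width)]
            for y in range(height)]

def vignette(u, v):
    """Hypothetical fragment shader: brightness falls off with distance from the image centre."""
    d = ((u - 0.5) ** 2 + (v - 0.5) ** 2) ** 0.5
    return max(0.0, 1.0 - 1.5 * d)

image = run_fragment_shader(vignette, 4, 4)
for row in image:
    print([round(p, 2) for p in row])
```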

4.4.6 Animation

Animation brings models to life by changing their properties over time. Techniques include keyframe animation, where important positions (keyframes) are defined, and the computer interpolates the frames in between, and procedural animation, where movements are generated through algorithms.
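Keyframe interpolation can be sketched with straight-line (linear) blending between the two keyframes that surround the requested time. The keyframe values are hypothetical; animation tools commonly offer smoother curves such as splines or easing functions, but the principle of computing in-between frames is the same.

```python
import bisect

def interpolate(keyframes, t):
    """Linearly interpolate a value between (time, value) keyframes sorted by time."""
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect.bisect_right(times, t)      # keyframes[i - 1] <= t < keyframes[i]
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    alpha = (t - t0) / (t1 - t0)           # fraction of the way from one keyframe to the next
    return v0 + alpha * (v1 - v0)

# Hypothetical keyframes for an object's x position: (time in seconds, x)
keys = [(0.0, 0.0), (1.0, 10.0), (3.0, 30.0)]
frames = [interpolate(keys, f / 2) for f in range(7)]   # sample at 2 frames per second
print(frames)   # [0.0, 5.0, 10.0, 15.0, 20.0, 25.0, 30.0]
```

Only three values were authored, yet seven frames were produced; at a real frame rate the computer fills in many more.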

4.5 Hardware and Software

Computer graphics rely on specialized hardware and software to achieve their goals.

4.5.1 Hardware

Key hardware components include:

- Graphics Processing Unit (GPU): Specialized for rendering images quickly and efficiently.

- Display Devices: Monitors and screens that show the final output.

4.5.1.1 GPU

GPUs provide the computational power to perform thousands of calculations in parallel as demonstrated in the video below.

4.5.1.2 Display Devices

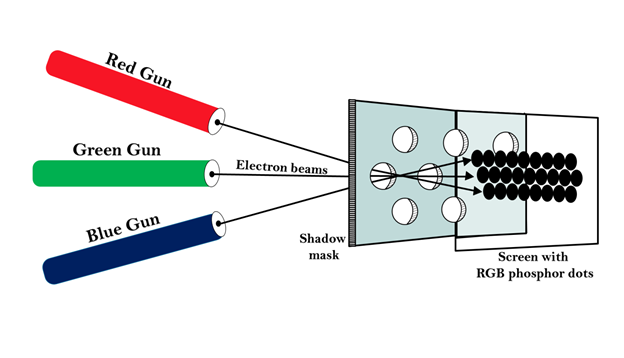

CRT (Cathode Ray Tube) Monitors: CRT monitors use electron beams to illuminate phosphors on the inside of a glass screen. The beams are directed by magnetic fields to specific points on the screen. CRTs offer very good colour accuracy and low motion blur; however, they are very bulky and consume a lot of power.

The video “How a TV Works in Slow Motion” by The Slow Mo Guys provides a fascinating exploration of how traditional CRT (Cathode Ray Tube) televisions display images by using high-speed cameras to capture the process in incredible detail.

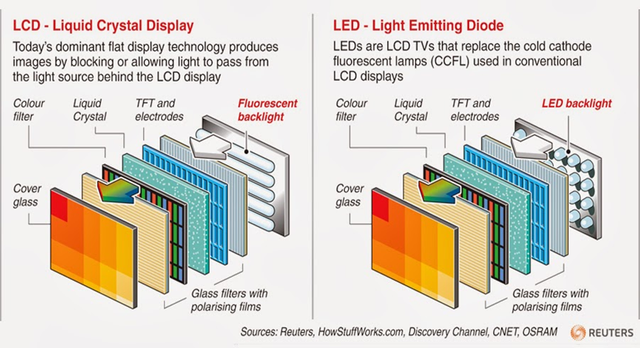

LCD (Liquid Crystal Display) Monitors: LCDs use liquid crystals sandwiched between two layers of glass or plastic. Applying a voltage changes the orientation of the crystals, which modulates the light from a backlight to produce images. LCDs are slim and consume less power, but suffer from limited viewing angles and motion blur.

LED (Light Emitting Diode) Monitors: Essentially a type of LCD monitor that uses LEDs for backlighting instead of fluorescent lights. There are two main types: edge-lit and full-array LED. They offer improved color accuracy and contrast.

4.5.2 Software

Software tools used in computer graphics range from simple drawing programs to complex 3D modelling and animation suites. Popular software includes:

- Adobe Photoshop: For 2D image editing.

- Blender: An open-source 3D modelling and animation tool.

- Autodesk Maya: A professional 3D modelling and animation software.

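- Graphics Rendering APIs: DirectX, OpenGL, and Vulkan are application programming interfaces through which programs issue rendering commands to the GPU. DirectX is a broader collection that also includes components for other multimedia tasks, such as audio and input.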

4.6 History of Computer Graphics (Supplementary)

4.6.1 1950s: The Beginnings

- Early Developments: The origins of computer graphics can be traced back to the 1950s when computers were primarily used for scientific and military applications. Early visual outputs were simple and often consisted of text and basic plots displayed on cathode-ray tube (CRT) monitors.

- Whirlwind Computer: Developed at MIT, the Whirlwind was one of the first computers capable of real-time text and graphics display on a CRT screen.

4.6.2 1960s: Foundations and First Steps

- Sketchpad (1963): Created by Ivan Sutherland at MIT, Sketchpad was a groundbreaking graphical computer-aided design system that allowed users to interact with the computer graphically. It is often considered the ancestor of modern computer graphics and CAD systems.

- IBM 2250: Introduced in the mid-1960s, the IBM 2250 was one of the first computer graphics terminals and allowed for the display and manipulation of graphical data.

- Early Research: Universities and research institutions began exploring computer graphics, leading to the development of algorithms for line drawing, clipping, and the representation of 3D objects.

4.6.3 1970s: The Emergence of 3D Graphics

- Hidden Surface Removal: Algorithms for hidden surface determination were developed, enabling more realistic 3D graphics by accurately displaying which surfaces should be visible.

- Shading Models: Gouraud shading and Phong shading were developed, improving the realism of 3D models by simulating the effects of light and shadow.

- Raster Graphics: The transition from vector graphics to raster graphics (pixel-based) began, allowing for more detailed and complex images.

- Movies and Games: Early computer-generated imagery (CGI) was used in films like “Westworld” (1973), and video games like “Pong” (1972) and “Space Invaders” (1978) showcased the potential of computer graphics in entertainment.

4.6.4 1980s: Commercialization and Advancement

- Personal Computers: The advent of personal computers like the Apple II, Commodore 64, and IBM PC brought computer graphics to a broader audience. Graphics capabilities in these machines improved rapidly.

- Graphics Standards: The development of graphics standards like GKS (Graphical Kernel System) and PHIGS (Programmer’s Hierarchical Interactive Graphics System) facilitated the creation of interoperable graphics applications.

- Software Development: Software like AutoCAD (1982) revolutionized computer-aided design, while rendering software such as Pixar’s RenderMan began to make waves in the film industry.

- Movies: “Tron” (1982) and “The Last Starfighter” (1984) featured extensive use of CGI, pushing the boundaries of what was possible in film.

4.6.5 1990s: Realism and Interactivity

- 3D Graphics Hardware: The introduction of dedicated graphics cards, such as those by NVIDIA and ATI, significantly boosted the performance and capabilities of personal computers in rendering 3D graphics.

- OpenGL and DirectX: Graphics APIs like OpenGL (1992) and DirectX (1995) standardized and simplified the development of graphics applications.

- Video Games: Games like “Doom” (1993) and “Quake” (1996) set new standards for real-time 3D graphics in gaming, leveraging advancements in hardware and software.

- Movies: Films like “Jurassic Park” (1993) and “Toy Story” (1995) demonstrated the incredible potential of CGI, with “Toy Story” being the first entirely computer-animated feature film.

The much-anticipated follow-up to Doom, Quake was one of the first games to make use of fully polygonal graphics and hardware acceleration, and is often credited as the point of origin for many lasting trends in the FPS genre, such as standardized mouse look and the WASD control scheme.

https://playclassic.games/games/first-person-shooter-dos-games-online/play-quake-online/

4.6.6 2000s: High-Definition and Real-Time Graphics

- High-Definition Graphics: The shift to HD graphics in both gaming and movies brought about unprecedented levels of detail and realism.

- Shaders and GPU Programming: The introduction of programmable shaders and advances in GPU architecture enabled more complex and realistic visual effects.

- Motion Capture: Used extensively in films like “The Lord of the Rings” trilogy (2001-2003) and “Avatar” (2009), motion capture technology captured realistic human movement for integration into CGI.

- Real-Time Rendering: Real-time graphics achieved new heights with games like “Half-Life 2” (2004) and “Crysis” (2007), showcasing lifelike environments and characters.

4.6.7 2010s to Present: Photorealism and Beyond

- Photorealistic Rendering: Ray tracing, a rendering technique that simulates the physical behavior of light, became more feasible for real-time applications with advancements in GPU technology, notably with NVIDIA’s RTX series.

- Virtual Reality (VR) and Augmented Reality (AR): VR and AR technologies gained popularity, creating immersive experiences in gaming, training, and other applications.

- Movies and Special Effects: CGI continued to evolve, with films like “Avengers: Endgame” (2019) using extensive computer graphics for both visual effects and fully CGI characters.

- Artificial Intelligence: AI and machine learning began to play significant roles in graphics, from procedural content generation to enhanced image processing and super-resolution techniques.

4.6.8 Conclusion

From simple line drawings on CRT monitors to immersive virtual worlds and photorealistic imagery, the history of computer graphics is a testament to the rapid pace of technological innovation. Today, computer graphics are an integral part of numerous industries, including entertainment, design, education, and scientific visualization, continuing to push the boundaries of what is visually possible.